Since ChatGPT’s release, I’ve been inspired to combine this incredible chatbot technology with a robot to see what additional value could be created with robotic embodiment.

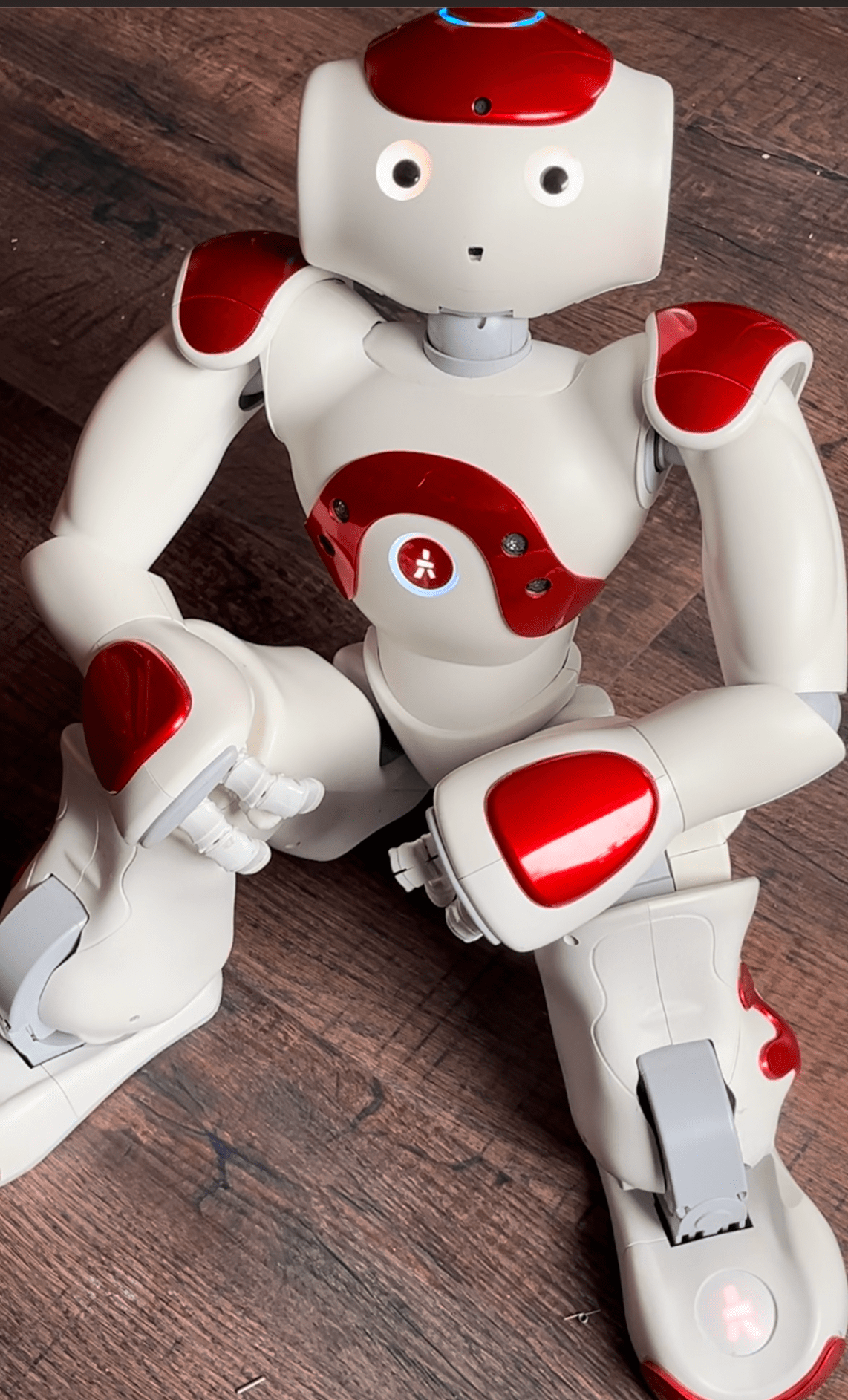

We have an Aldebaran Nao and an Anki Vector social robot. I decided to try Nao first because I already had a working API project for it; Vector would have needed more work, as we haven’t programmed with it since Anki, the manufacturer, went bankrupt.

The first problem I ran into was a mismatch between the Python versions supported by the OpenAI GPT library and the Nao API. Ideally, a single project would have handled both Nao’s communication and the connection to GPT, but the Nao Python API only runs on Python 2.7 and the OpenAI Python library requires Python 3!

So I had to make a choice. Since I had already been using the Nao API in Java, I decided to continue with Java and write a Python server to wrap the OpenAI GPT calls. I could have called the GPT API from Java, but the Java client code is not created or maintained by OpenAI themselves, so I chose to go with Python.

I created two projects – you can see the code below:

Java Nao client

https://github.com/thoshamoodley/NaoGPTJavaProject/blob/main/src/NaoConnect.java

Python OpenAI GPT server

https://github.com/thoshamoodley/OpenAIConnect/blob/main/OpenAIConnectServer.py

The Java client sends an initiate request to the Python server, which then contacts GPT with this prompt or something similar:

[{"role": "system",

"content": "You are a humanoid robot with a kind disposition and a good sense of humor who always replies in 20 words or less. Start your conversation with 'I would like to talk about'"},

{"role": "user", "content": "Start a conversation about a random topic."}]
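As a rough sketch of how the Python side might send this prompt, assuming version 1.0 or later of the `openai` package: the function names `build_initial_messages` and `ask_gpt` and the model name are illustrative choices, not taken from the original project. The client is passed in as an argument so the functions can be tried out without a network connection.

```python
SYSTEM_PROMPT = (
    "You are a humanoid robot with a kind disposition and a good sense of "
    "humor who always replies in 20 words or less. Start your conversation "
    "with 'I would like to talk about'"
)


def build_initial_messages():
    """Return the opening message list shown above."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": "Start a conversation about a random topic."},
    ]


def ask_gpt(client, messages, model="gpt-3.5-turbo"):
    """Send the messages to the chat completions endpoint and return the reply text.

    `client` is expected to be an `openai.OpenAI()` instance (openai>=1.0).
    The default model name is an assumption, not the original project's choice.
    """
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

With the real library, `ask_gpt(OpenAI(), build_initial_messages())` would return the robot's opening line, where `OpenAI` is imported from `openai` and reads the API key from the `OPENAI_API_KEY` environment variable.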

If you want to try this yourself, the OpenAI GPT getting started guide is all that you need.

The response from GPT is returned to the Java code, and the robot speaks the text using the Aldebaran API's ALTextToSpeech module.
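One way the reply could travel back to the Java client is over plain HTTP. The sketch below is only an assumption about the transport (the linked server may well do this differently), and the `/initiate` path, the JSON shape and the helper names `make_handler` and `serve` are all hypothetical. It uses only the Python standard library.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_handler(get_reply):
    """Build a handler that answers POST /initiate with a JSON reply.

    `get_reply` is any zero-argument function returning the text the robot
    should speak, for example a wrapper around the GPT call.
    """

    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            # Consume the request body, even though this sketch ignores it.
            length = int(self.headers.get("Content-Length") or 0)
            self.rfile.read(length)
            if self.path != "/initiate":  # hypothetical endpoint name
                self.send_error(404)
                return
            body = json.dumps({"reply": get_reply()}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            # Suppress the default per-request logging.
            pass

    return Handler


def serve(get_reply, host="127.0.0.1", port=8000):
    """Create the server without starting it; call .serve_forever() to run."""
    return HTTPServer((host, port), make_handler(get_reply))
```

The Java side would then POST to `/initiate`, read the `reply` field from the JSON body, and pass that string to ALTextToSpeech.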

Then the robot records the user's response using ALAudioRecorder, activating one of the four microphones available on Nao. I then have to copy the audio file off Nao over SSH onto the local disk so that the Python server can access it. Next, I send a second request to the Python server to get GPT's response to the user input. The Python server uses OpenAI to convert the .wav file from speech to text, and it's really quite quick, too! Finally, I compose the conversation context, or history, in Python and send it to the GPT API. This is how it looks:

[{

'role': 'system', 'content': "You are a humanoid robot with a kind disposition and a good sense of humor who always replies in 20 words or less. Start your conversation with 'I would like to talk about'"},

{'role': 'user', 'content': 'Start a conversation about a random topic.'},

{'role': 'user', 'content': 'I like to see sparrows.'},

{'role': 'assistant', 'content': "Sparrows are delightful to watch! Their chirping adds music to everyday moments. Nature's small wonders bring joy."},

{'role': 'user', 'content': 'The blue tits is also a very beautiful bird.'},

{'role': 'assistant', 'content': "Yes, blue tits are stunning with their vibrant colors! Nature's palette shines bright with these charming feathered beauties."},

{'role': 'user', 'content': 'I also like parakeets.'

}]

There is quite a sizeable delay caused by the GPT API. For lack of a better method, I also spend a fixed 7 seconds recording the user response each round, as I could not find an event in the Aldebaran Nao API that would tell me when the user stopped speaking. Together, these lead to a long pause between when I stop speaking and when Nao responds. However, I don’t think that spoils the experiment too much. You can still see how sentient Nao appears to be with this new fluent chatbot interaction, which is an improvement because you can now speak about anything. I did notice, though, after many debugging rounds, that the conversation topics chosen by GPT tended to repeat and cluster around certain subjects, for example space exploration.
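The speech-to-text step and the growing history shown above could be sketched roughly as follows. This assumes version 1.0 or later of the `openai` package; the names `transcribe` and `Conversation` and the `whisper-1` model are illustrative assumptions rather than the original project's code. Unlike the history printed above, this sketch expects every assistant reply, including the opening line, to be stored.

```python
def transcribe(client, wav_path, model="whisper-1"):
    """Convert a recorded .wav file to text.

    `client` is expected to be an `openai.OpenAI()` instance. The model
    name is an assumption, not taken from the original project.
    """
    with open(wav_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text


class Conversation:
    """Keeps the running message list that is re-sent to the chat API each round."""

    def __init__(self, system_prompt, opening_request):
        self.messages = [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": opening_request},
        ]

    def add_user(self, text):
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text):
        self.messages.append({"role": "assistant", "content": text})

    def next_reply(self, user_text, complete):
        """Record what the user said, ask for a reply, and record the reply.

        `complete` is any function that takes the message list and returns
        the assistant's text, such as a thin wrapper around the chat
        completions call.
        """
        self.add_user(user_text)
        reply = complete(list(self.messages))  # pass a copy of the history
        self.add_assistant(reply)
        return reply
```

Because the whole list is sent every round, the request grows with the conversation, so a long session may need older turns trimmed.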

Overall, I’m pleased with the project outcome. It’s always nice to see our old Nao serving the purpose we originally bought him for: robot research. It’s amazing to be able to use current AI technology and, by pairing it with an embodied robot, see how far it has come in emulating sentience.

It goes to show that the ‘brain’ of a robot can keep improving, and that the body can stay useful for much longer as AI advances. And this comes at a time when humanoid robots seem to be taking a giant leap forward. Consider Figure 01, the humanoid robot which runs OpenAI code to allow it to respond logically and contextually to what it senses in its environment. It can even explain why it has taken specific actions. It’s truly amazing!

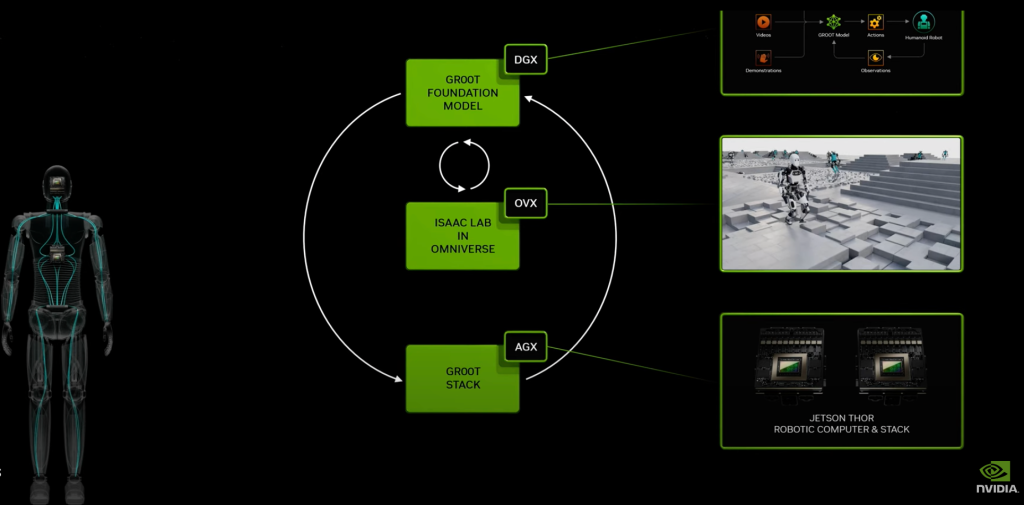

Consider also NVIDIA’s recent GTC announcement of Project GR00T, a foundation model developed specifically for humanoid robots to understand natural language and to learn in simulation at scale, with thousands of simulations running in parallel.

NVIDIA GR00T Isaac stack for smoother humanoid robot integration

Skip to around 1h50 in the GTC keynote below:

Lastly, let’s look quickly at Tesla’s Optimus Gen 2, which shows incredibly sensitive movement capability.

That rounds up this detour into the progress of AI combined with humanoid robotics. I look forward to a future in which these intelligent humanoid robots can play a meaningful role in our society.
